Microsoft has apologised for racist and sexist messages generated by its Twitter chatbot. The bot, called Tay, was launched as an experiment to learn more about how artificial intelligence programs can engage with internet users in casual conversation. The program had been designed to mimic the speech of a teenage girl, but it quickly learned to imitate the offensive language that Twitter users started feeding it. Microsoft was forced to take Tay offline just a day after it launched. In a blog post, the company said it takes "full responsibility for not seeing this possibility ahead of time."

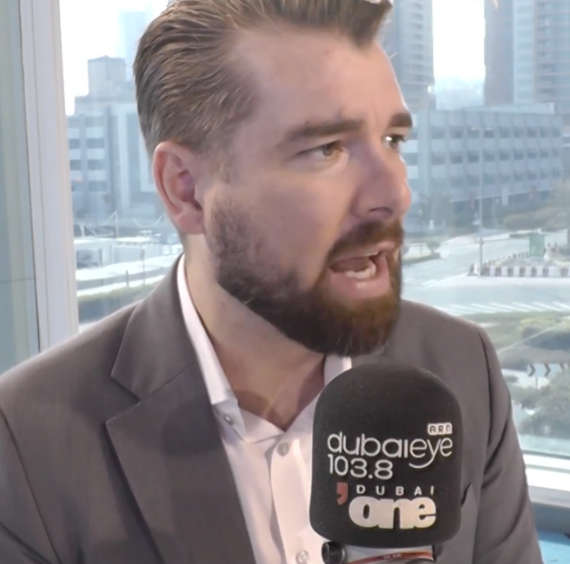

Former Binance chief guilty of money laundering

Former Binance chief guilty of money laundering

Emirates attracts pilots with higher salaries, new perks

Emirates attracts pilots with higher salaries, new perks

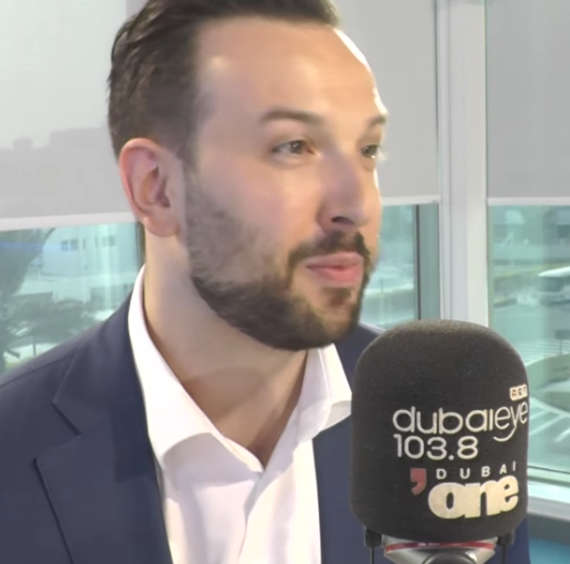

Spinneys increases IPO retail offering due to high demand

Spinneys increases IPO retail offering due to high demand

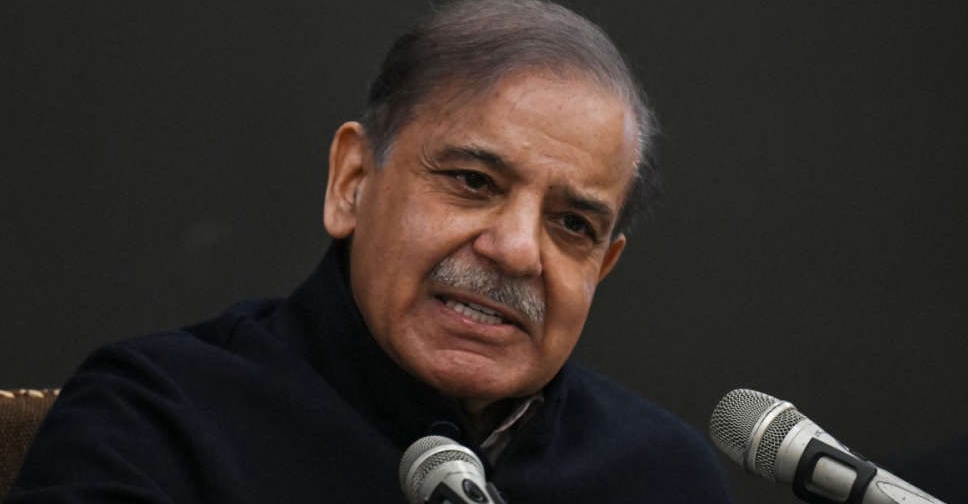

IMF $1.1 billion tranche to help Pakistan's economic stability, says PM Sharif

IMF $1.1 billion tranche to help Pakistan's economic stability, says PM Sharif

UAE, Ukraine conclude terms of trade pact

UAE, Ukraine conclude terms of trade pact